Summary:

Academics, experts, and laypeople often assume stereotypes about groups are inaccurate. This assumption is used to justify policies meant to reduce or eliminate such beliefs.

Most stereotypes that have been studied have been shown to be approximately correct. Usually, stereotype accuracy correlations exceed .50, making them some of the largest relationships ever found in social psychology.

Even when people hold true stereotypes, those stereotypes have little effect on how they judge or treat individuals about whom they have other, individualized information.

Unlike most findings in social psychology that are small and flimsy, the results noted above are clear, large, reliable, and untouched by the replication crisis.

The field of stereotype accuracy casts doubt on the usefulness of programs meant to reduce stereotypes in education, government, and business as a way to achieve equality.

Introduction

A recent paper titled The Misperception of Racial and Economic Inequality has gotten a lot of attention. It has received over 40 citations, despite being out for less than two years. The work was highlighted at a major professional social psychology conference,1 and was even featured on Twitter by a prominent member of the science reform movement in psychology.2 Why? It has shown quite clearly that many people, including those surveyed in a large representative sample, drastically underestimate the Black/White wealth gap. It shows this by comparing people’s perceptions of the gap to federal data on the actual gap.

The paper makes an interesting point: People’s perceptions of racial wealth inequality are largely inaccurate. As such, the finding is doubly interesting: It is consistent with the longstanding claim in the social sciences that stereotypes are inaccurate, yet it is inconsistent with the longstanding claim that stereotypes typically exaggerate real differences. In this case, they underestimate real differences.

But this paper raises some additional issues. Are stereotypes usually inaccurate, or are perceptions of wealth an outlier? Perhaps even more important, it shows that a claim that is often informed by ideological agendas and activism – that stereotypes are inaccurate – is actually transformable into an empirical question that is readily answerable by social science. The recipe is simple:

Assess people’s beliefs about one or more groups.

Identify credible criteria for what those groups are actually like.

Compare the two.

Once the door is open to addressing a question scientifically, the answers may not be what people expect or want. Of course, there are good reasons for stereotypes’ bad reputation: some stereotypes are malevolent and destructive, and have been exploited for propaganda purposes for generations. Each of these was once common, and some can still be found today:

Women as fit for nothing but child-rearing and homemaking.

Arabs and Muslims as nothing but bloodthirsty terrorists.

Jews as grasping hook-nosed Nazis perpetrating genocide on innocent Palestinian babies.

Such characterizations are inaccurate, immoral, and repulsive, to say the least. As malevolent as these images may be, they say nothing about the accuracy of what everyday people think of women, Jews, Muslims, or any other group. Here is a secret which, if you go by their behavior, many social scientists and academic intellectuals seem to either not know or forget when the topic of stereotypes comes up: If you want to know what people think about something, you cannot go by television commercials, political cartoons, or characters in movies; it is far more useful to ask them. This is not a silver bullet, because answers depend on how the questions are asked, and people are indeed subject to many biases and distortions. However true this may be, things that cannot possibly reflect their personal beliefs, such as movies or political cartoons, are pretty obviously absurd substitutes for figuring out what people believe. Which raises the question: what do people actually believe about groups, and are those beliefs inaccurate?

A Simple Test

Before continuing, therefore, please take the following quiz:

1. Which group is most likely to commit murder?

A) Men

B) Women

2. Older people are generally more __________ and less __________ than adolescents.

A) Conscientious; open to new experiences

B) Neurotic; agreeable

3. In which ethnic/racial group in the U.S. are you likely to find the highest proportion of people who supported Democratic presidential candidates in 2008 and 2012?

A) Whites

B) African Americans

4. People in the U.S. strongly identifying themselves as ___________ are most likely to attend church on Sunday.

A) Conservative

B) Liberal

5. On December 24, 2004, a dad and his three kids wandered around New York City around 7 pm, looking for a restaurant, but found most places closed or closing. At the same time, his wife (their mom) performed a slew of chores around the house. This family is most likely:

A) Catholic

B) Baptist

C) Jewish

D) Pagan/Animist

Answers appear at the bottom of this paragraph. If you got three or more right, congratulations – your stereotypes assessed here were quite accurate. On the other hand, don’t be too impressed with yourself. Lots of people hold stereotypes about as accurate as yours. And yet, most of us have had it beaten into our heads that ‘stereotypes are inaccurate.’ Why is that? (The answers are: A, A, B, A, C.)

Activism Versus Evidence

It is very hard to have a serious, scientific, evidence-based discussion about stereotypes because the term itself is imbued with malice and delusion. People generally presume that stereotypes are irrational, invalid, and practically synonymous with prejudice. It’s even hard to have a serious discussion about stereotypes with scientists. Many have been deeply concerned with combating oppression – anti-Semitism in the immediate aftermath of the Second World War, racism and sexism following the civil rights and women’s movements in the ’60s, and, more recently, various movements for social justice for all sorts of marginalized groups. Many scientists erroneously believe that since stereotypes have been used to incite hatred, ostracism, and even genocide, they must also be false (though this doesn’t follow logically).

As a result, stereotypes have a terrible reputation. If you make almost any claim about almost any group, the quickest way to have your claim dismissed and delegitimized is for someone to declare: ‘That’s just a stereotype!’ This ‘works’ because of the widespread assumption that stereotypes are inaccurate, and the unstated insinuation that you are probably a bigot. Thus, any evaluation of the validity of your claim is short-circuited by implied impugnment of your moral character as depraved.

Still, high moral purpose (or lack of it) does not translate to scientific truth (or its absence). We do not usually presume people making other generalizations – say, about the weather in Anchorage or the taste of cherries – are doing something bad and inaccurate. Despite this obvious point, the notion of generalizations about people as inherently bad and inaccurate has long been baked into the science without much scientific support.

The Black Hole at the Bottom

One of us (Jussim) began research on stereotypes in the early 1980s. At the time, there were no reasons to doubt the widespread belief in stereotype inaccuracy. As a grad student, I (Jussim) sought to track down the evidence supporting those claims – not to refute them, but to promote them and proclaim to the world the hard scientific data showing that stereotypes were wrong. So, when some published article cited some source as evidence that stereotypes were inaccurate, I would track down the source hoping to get the evidence.

And, slowly, over many years, I made a disturbing discovery. There was just about no there there. Claims of stereotype inaccuracy were often literally based on nothing. For example, a classic paper from 1977 describing research by social psychologists Mark Snyder, Elizabeth Tanke, and Ellen Berscheid stated: “Stereotypes are often inaccurate.”3 Ok, but scientific articles are usually required to support such claims, typically via a citation to a source providing the evidence. There was no source there. Obviously, it could still be based on something unarticulated, but scientists cannot be in the business of making claims up out of whole cloth. If no evidence or citation to evidence is provided, the article literally provides no evidence to support that claim.

This pattern is pervasive in the scholarly literature on stereotypes, but it comes in two flavors. Therefore, we have given it two names, for slightly different manifestations of essentially the same idea. The black hole at the bottom of declarations of stereotype inaccuracy refers to the following pattern that almost anyone can see for themselves when reading the scholarly literature on stereotypes. When an article declares a stereotype to be inaccurate, it often provides no scientific citation whatsoever (it’s an evidence-free declaration). It’s just a black hole. Try it. Next time you read a social science article declaring stereotypes to be inaccurate, be alert – do they report original evidence, or, if not, do they cite another article as providing that evidence? If not, then you have found one of the stereotype inaccuracy black holes.

Idea Laundering

The second flavor of claims unhinged from actual evidence is “idea laundering” (a term we did not coin).4 It refers to a process that can create the appearance of scientific legitimacy on the basis of little or no empirical evidence supporting the claim. It works like this: some academic makes some claim with little or even no evidence in an academic journal. It might even be flagged as speculation. Nonetheless, another academic finds the idea useful and cites that article. Now there are two published articles touting the idea. Rinse and repeat for scores or even hundreds or sometimes thousands of articles, and you have a “scholarly consensus” spring up around an entirely speculative or unproven idea giving it the false appearance of “fact” or “knowledge.” Wikipedia calls the same phenomenon the “Woozle effect,” named after Winnie-the-Pooh’s quest to find a non-existent animal by following tracks that he was unaware of having made himself previously.5

Many researchers cite social psychologist Gordon Allport’s book, The Nature of Prejudice (1954), in support of the claim that stereotypes are inaccurate, or, at least, exaggerations of real differences. And Allport did declare that stereotypes exaggerated real differences. But, aside from an anecdote or two, which is hardly scientific evidence, he presented no evidence that they actually did so. Famous psychologists declaring stereotypes inaccurate without a citation or with a citation to a source that itself provided no evidence meant that anyone could do likewise, creating an illusion that pervasive stereotype inaccuracy was ‘settled science.’ Of course, if “settled science” means “most scientists believe this is true,” it is settled science in a social sense, but not because it is actually true. Only if one looks for the empirical research underlying such claims can one discover that there is nothing there.

There is, however, another alternative which, at first glance, might appear to “save” the ability to declare stereotypes to be inaccurate. Perhaps those who make this declaration are not stating an empirical description of the world. Instead, perhaps they are simply defining stereotypes as inaccurate. Anyone can define their terms pretty much how they choose. If a unicorn is defined as a supernatural animal that looks a lot like a horse with a horn, that is not a description of the empirical state of the world (which has no unicorns); it is a definition of the word “unicorn.”

But there are two problems with defining stereotypes as inaccurate. The first is that if all beliefs about groups are stereotypes, and all stereotypes are defined as inaccurate, then all beliefs about groups are inaccurate. It is, however, logically impossible for all beliefs about groups to be inaccurate. This would make it ‘inaccurate’ to believe either that two groups differ or that they do not differ, and both cannot possibly be inaccurate. The idea that ‘all beliefs about groups are stereotypes and all are inaccurate’ can be summarily dismissed as logically incoherent.

There is, however, a second way to define stereotypes as inaccurate that solves this incoherence problem but brings in another. Perhaps stereotypes are the subset of beliefs that are inaccurate. In this case, only inaccurate beliefs are stereotypes; accurate beliefs about groups may exist, but they are not stereotypes. This solves the incoherence problem that comes from “all beliefs about groups are inaccurate” but produces a new one.

If stereotypes are the subset of beliefs about groups that are inaccurate, before declaring some belief to be a stereotype one would need to first empirically establish that that belief is inaccurate – otherwise, one could not know that it is a stereotype. The logic is inexorable, as can be seen from any example outside of stereotypes. If one declares that Covid is caused by a novel coronavirus, one cannot assume a patient with pneumonia has Covid without testing for Covid (the person might have a bacterial infection or an infection from a different virus). In the case of Covid, you need a Covid test. Similarly, one can define a “fatal transmission failure” as a car failing to move even though the engine is on due to clutch slippage. However, just because a car can’t move does not mean there has been a transmission/clutch failure. One needs to evaluate whether the clutch is actually slipping, because “not moving” can come from several things besides clutch failure. This is all obvious.

Amazingly, what is obvious with infection-testing and car failure all of a sudden ceases to be obvious to many people, including many with PhDs, when it comes to stereotypes, even though the logic is identical. One might think a college education – particularly an advanced, graduate school education – would provide training in logic and inference that would ensure this would be obvious. If one thought this, one would be obviously wrong. If one uses this meaning of stereotype inaccuracy, one needs to show that a particular belief about a group is inaccurate before one can refer to it as a stereotype. Absent evidence of inaccuracy, one cannot know the belief is inaccurate; therefore, one cannot know it is a stereotype.

Vanishingly few social scientists performing research that refers to stereotypes ever present such a test. If this is the definition, most claims about “stereotypes,” including most social science scholarship, would need to be dismissed because almost none of it actually first showed the beliefs in question to be inaccurate. The need to dismiss the work would stem from the fact that whenever a belief is not shown to be inaccurate, it cannot be known to be a stereotype (if one uses this definition). Of course, all of these problems can be easily solved by using a definition that is neutral with respect to inaccuracy. Our definition of “stereotype” is simply “a belief about a group.” It may be right, wrong, partially right, moderately right, or any other combination or complex pattern of right, wrong, close, etc. On the other hand, a neutral definition removes social justice-oriented activists' justification for presuming that stereotypes are inaccurate, and this may be a price many are unwilling to pay for mere logical coherence.

The Evidence on the Accuracy of People’s Beliefs about Groups is Clear

When stereotypes are defined neutrally, accuracy is an empirical question. If one wanted to know the accuracy of the weather forecast, say, with respect to predicting tomorrow’s high temperature, one would:

Identify the prediction.

Measure tomorrow’s high temperature.

Compare the two.

One can do this in many ways. One could literally do it for tomorrow by computing a discrepancy score. If a person’s prediction is that the high will be 65 degrees, it either is or it is not. We call these personal discrepancies because they assess the accuracy of a single person. But what if it’s 64 degrees? Is this one-degree discrepancy ‘wrong’ or is it ‘close enough’? This is a matter of individual judgment and probably depends on the context. For most casual purposes, we suspect most people would consider 64 “close enough.”

Another thing one can do is compare predictions across many days; one can correlate a person’s predictions with the daily highs over many “tomorrows.” The higher the correlation, the more that variations in predictions correspond to variations in the actual temperatures. We call these personal correlations because they constitute the correlational accuracy of a single person. One can also assess the accuracy of consensual beliefs about daily high temperatures; these constitute the beliefs held by many people together.

One could ask many people to predict the daily highs, and then compute the average prediction. One can then compute a consensual discrepancy score (by comparing the average prediction to the actual temperatures) or a consensual correlation, by correlating the average predictions with actual high temperatures over many “tomorrows.”
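The four accuracy measures just described (personal discrepancy, personal correlation, consensual discrepancy, consensual correlation) can be sketched in a few lines of Python. This is only an illustration: the forecaster names and all temperatures are made-up numbers, and the helper functions `pearson` and `discrepancies` are our own hypothetical names, not part of any cited study.

```python
from statistics import mean

def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

def discrepancies(predicted, actual):
    """Signed error (prediction minus criterion) for each day."""
    return [p - a for p, a in zip(predicted, actual)]

# Hypothetical criterion: actual daily highs over five "tomorrows".
actual_highs = [64, 70, 58, 75, 61]

# Hypothetical beliefs: each person's predicted high for the same days.
predictions = {
    "forecaster_a": [65, 72, 60, 73, 60],
    "forecaster_b": [60, 66, 59, 80, 66],
    "forecaster_c": [67, 69, 55, 75, 63],
}

# Personal accuracy: one person's predictions against the criterion.
personal_discrepancy = {
    name: discrepancies(pred, actual_highs) for name, pred in predictions.items()
}
personal_correlation = {
    name: pearson(pred, actual_highs) for name, pred in predictions.items()
}

# Consensual accuracy: average everyone's prediction per day first,
# then compare that average belief against the criterion.
consensus = [mean(day) for day in zip(*predictions.values())]
consensual_discrepancy = discrepancies(consensus, actual_highs)
consensual_correlation = pearson(consensus, actual_highs)

print(personal_discrepancy["forecaster_a"])
print(round(consensual_correlation, 2))
```

The same structure carries over to stereotypes under these assumptions: replace the predicted temperatures with people's estimates of group characteristics and the actual highs with the criterion data.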

Now let’s return to stereotypes. These empirical tests accomplish different things, depending on what one means when one declares stereotypes to be inaccurate. If this is an empirical claim, then the empirical evidence can falsify or support this claim. If one defines stereotypes as the subset of beliefs about groups that are inaccurate, then the empirical evidence indicates whether any particular belief is (if it is inaccurate) or is not (if it is accurate) a stereotype.

So what do the data say? Over 50 studies have now been performed assessing the accuracy of people’s beliefs about demographic, national, political, and other groups. The evidence is clear.6 Based on rigorous criteria, laypeople’s beliefs about groups correspond well with what those groups are really like. This correspondence is one of the largest and most replicable effects in all of social psychology. Stereotype accuracy has been obtained and replicated by multiple independent researchers studying different stereotypes and using different methods all over the world. Table 1 is based on our 2016 review of all studies of stereotype accuracy we could find up to that time.7

Table 1 shows that stereotypes are more accurate than most social psychological hypotheses. We can confidently say this, because a large meta-analysis of just about the entire literature in social psychology up until about 2000 found that, across all topics, the average effect size in social psychology was about r=.20.8 As shown in Table 1, only about 24% of effects in social psychology exceed r=.30, and only 5% exceed r=.50. In contrast, most stereotype accuracy correlations exceed .50, making them some of the largest relationships ever found in social psychology.

For example, way back in 1978, in a study reported in the Journal of Personality and Social Psychology, social psychologists Clark McCauley and Christopher Stitt first obtained U.S. census data comparing African Americans and other Americans on the likelihood of completing high school or college, becoming an unwed mother or unemployed, and on having a family with four or more children.9 They then asked people from various walks of life – college and high school students, union members, a church choir, master’s of social work students, and caseworkers in a social service agency – about their beliefs about the percentages of Americans in general, and African Americans in particular, with these characteristics. People’s estimated percentages were close to the census data, and correlated extremely highly with the actual differences.

People are also quite good at perceiving many gender differences. By the 1990s, several meta-analyses of sex differences had been published. Much as McCauley and Stitt started with U.S. census data, social psychologist Janet Swim, in two studies reported in a 1994 issue of Journal of Personality and Social Psychology, took this meta-analytic research as her starting point – as criteria for real sex differences.10 The meta-analyses showed, for example, that males outperform females on math tests, and are more restless and aggressive, whereas females are more influenced by group pressure and are more skilled at decoding nonverbal cues. She then asked people to estimate the size of these differences; again, people were quite good, and their estimates again were highly correlated with the actual differences. Similar results have been found for all sorts of other stereotypes, including those about ethnic groups, age, occupational groups, college majors, and sororities.

Despite the frequency with which researchers proclaim the inaccuracy of stereotypes, we are regularly accused of debunking a straw man argument when we contest this claim. In reviews of articles submitted for publication, we were regularly told variants of “Modern scientists do not broadly declare stereotypes to be inaccurate.” So we did a small-scale study, a content analysis of how several famous textbooks and influential books treat stereotypes. Results are summarized in Table 2. In our home discipline of social psychology, stereotypes are not always declared inaccurate, but a slew of famous canonical texts either do so or emphasize inaccuracy without ever mentioning accuracy.

Some Exceptions and Qualifications

Inasmuch as over 50 studies of stereotype accuracy have been performed, it is fair to say that, in general, they found that the correlations between people’s stereotypes and criteria were some of the largest relationships ever found in the social sciences, ranging from about .4 to .9 (the average correlation in social psychology is about .2). This is surely because, whatever their limitations, motivations, and biases, people have some sensitivity to actual realities, at least most of the time, and at least if those realities are relatively apparent.

There are, however, two important limitations to this work. First, some stereotypes have been found to have very little accuracy, although they are probably not among the first things that come to mind when most people think about “stereotypes.” Political stereotypes tend to have high correlational accuracy because people have a good general sense of the direction of differences between Democrats and Republicans, or liberals and conservatives. However, they also consistently exaggerate the real differences. This probably occurs for several reasons, but the most crucial ingredient seems to be that people caricature their opponents. Colloquially speaking, the further people are on the left, the more they see those on the right as fascists and Nazis, and the further people are on the right, the more they see those on the left as Marxists and communists. It may not always be quite that extreme, but the point is that people exaggerate real political differences primarily by distorting their opponents as being more extreme than they really are.11

There is one type of stereotype, however, that the bulk of the research shows to be inaccurate – national stereotypes of personality. A slew of studies have administered standard personality inventories to people on every continent, except Antarctica, and those results were then used as the criterion against which to compare people’s beliefs about, for example, the openness or agreeability of people in those countries. The most common finding is that people’s perceptions are almost completely unrelated to the actual personality scores. Studies have focused mainly on assessing levels of accuracy rather than explaining inaccuracy, so we do not really know why this occurs. It does seem plausible, though, that few people have extensive experiences with large swaths of people from other cultures and countries. If stereotypes are, at least sometimes, based on realities, but people have little or no access to those realities, there is no reason to expect much accuracy in such stereotypes.

Last, as we described in the introduction, Americans’ beliefs about differences in wealth between White and African Americans wildly underestimate real wealth differences. We suspect that this is mostly because people do not understand that most wealth comes from home ownership.

So, even though most of the research finds stereotypes of the people studied are fairly accurate, there are some notable exceptions. In addition, there are other reasons not to run screaming to the world that “all stereotypes are accurate!” (over and above that not being true to the evidence). All studies are limited. Not all stereotypes have been studied. Not all people’s stereotypes have been studied. There are no good criteria against which to compare many stereotypes. Therefore, it is possible that, over the coming decades, more and more evidence of inaccurate stereotypes will emerge. Or perhaps a particular belief is one that has never been evaluated for accuracy.

Another important limitation is that some stereotypic beliefs have no accuracy standard. For example, sometimes, people consider role prescriptions to be stereotypes (“children should be seen and not heard”). What people “should” do is a moral question, and there are no accuracy criteria identifying what the right morals for people to hold are. Therefore, one cannot evaluate the accuracy of prescriptive stereotypes.

People also may hold stereotype beliefs about group differences that have never been studied. Are New Yorkers really louder than other people? We doubt there is any good data on this. Absent such data, it is impossible to evaluate the accuracy of someone who believes New Yorkers are unusually loud. Of course, this cuts two ways. We certainly cannot say such beliefs are accurate, but nor can we declare them to be inaccurate, and any person who does is making an unjustified claim.

Another qualification to this line of work stems from confounding the accuracy of a perceived difference with the explanation for that difference. Let’s say some people believe men are more interested in STEM careers than are women. This is either true or not at any given point in time for any particular sample of men and women. Determining whether this belief is correct provides no information whatsoever about the explanations for this difference or nondifference. This should be obvious. Establishing that something is true is very different from establishing why it might be true.

What About Biases in Evaluating Individuals?

One common form of pushback to this line of argument is that while people’s beliefs about groups may not be completely out of touch with reality, they may inaccurately judge individuals. It is true that, in general, in the absence of lots of detailed and relevant information about a person, people’s stereotypes do bias their judgments. Of course, any Bayesian, unless afraid of political blowback, will tell you that this is completely rational – “priors” (also known as beliefs and expectations) should influence judgments under uncertainty, in the absence of evidence to the contrary. So, the more important question is, ‘When contrary information becomes available, do people rigidly stick to their stereotypes or do they adjust their perceptions and judgments accordingly?’

The answer is clear, though with a bit of nuance. Scores of studies show a consistent pattern: People generally judge others on their merits and the extent to which they do this is very powerful. Reliance on individuating information averages about r=.70, which is also one of the largest effects in social psychology. Judging people on their merits is a pattern so powerful that a 1996 review and meta-analysis by social psychologist Ziva Kunda and cognitive scientist Paul Thagard described those effects as “massive.”12
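The Bayesian logic mentioned above can be made concrete with a small sketch. It assumes a simple two-hypothesis version of Bayes' rule; the function name `posterior` and all probabilities are hypothetical illustrations, not estimates from any study. It shows that uninformative evidence leaves a prior untouched, while strongly diagnostic individuating information pulls very different priors close together.

```python
def posterior(prior, p_evidence_if_true, p_evidence_if_false):
    """Bayes' rule for one binary hypothesis about an individual.

    prior: belief that the hypothesis is true before seeing the evidence.
    p_evidence_if_true / p_evidence_if_false: how likely the observed
    individuating information is under each possibility.
    """
    numerator = prior * p_evidence_if_true
    return numerator / (numerator + (1 - prior) * p_evidence_if_false)

# Two perceivers start from different (hypothetical) group-based priors.
prior_high, prior_low = 0.7, 0.3

# Case 1: the individuating information is uninformative (equally likely
# either way), so each perceiver's judgment simply equals the prior.
vague_high = posterior(prior_high, 0.5, 0.5)
vague_low = posterior(prior_low, 0.5, 0.5)

# Case 2: the individuating information is highly diagnostic, so both
# perceivers end up with similar judgments despite different priors.
clear_high = posterior(prior_high, 0.95, 0.05)
clear_low = posterior(prior_low, 0.95, 0.05)

gap_before = prior_high - prior_low   # difference due to priors alone
gap_after = clear_high - clear_low    # difference left after strong evidence

print(round(gap_before, 3), round(gap_after, 3))
```

This is a normative sketch of how priors and evidence combine, not a claim about the psychological process by which people actually form judgments.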

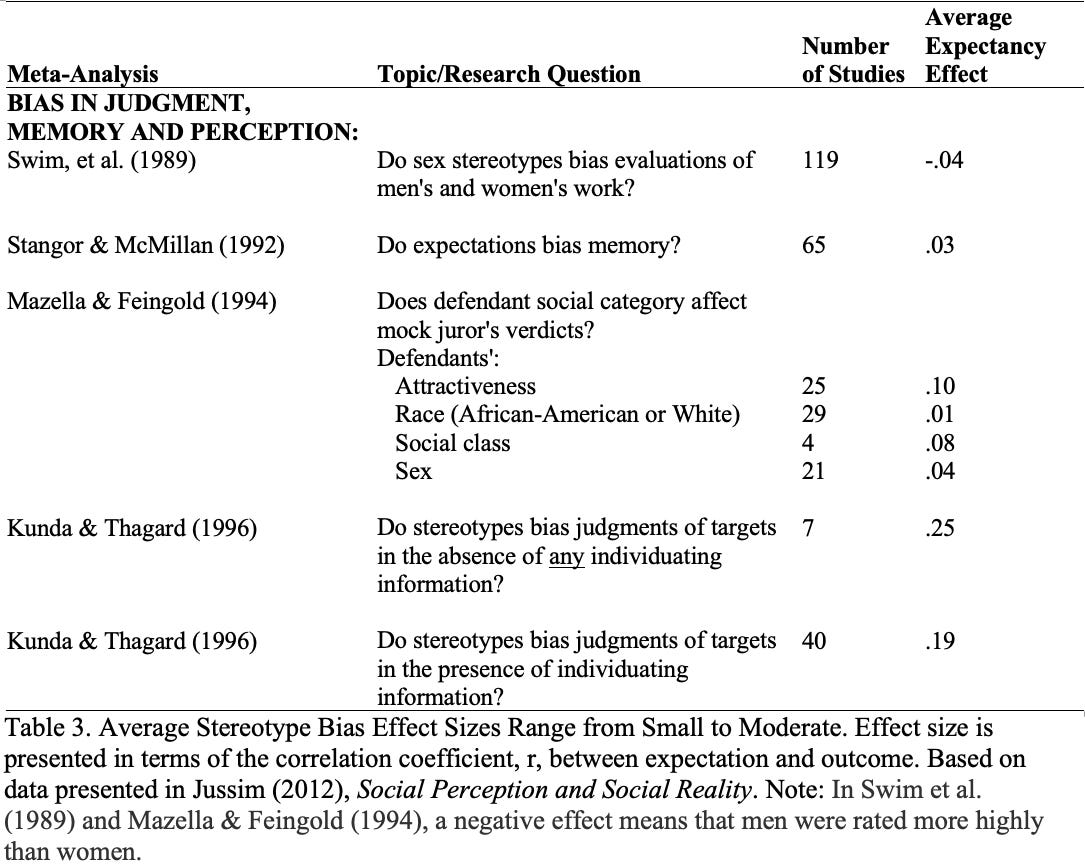

Of course, just because people almost entirely judge others on their merits does not mean that stereotypes have no biasing effects. This is where some nuance is required. Even in the presence of relevant individuating information, stereotype biases are, on average, quite small, often around zero. Table 3 presents the average bias effects found in a slew of meta-analyses of studies of the role of stereotypes in person perception, and is based on data presented in Social Perception and Social Reality: Why Accuracy Dominates Bias and Self-Fulfilling Prophecy (Jussim, 2012). The simple average for the overall effect of stereotypes on person perception, across nearly all the studies of stereotyping that have been performed, is a correlation (between target group label and perceiver judgment of an individual) of .10. Furthermore, that is the simple, unweighted average, so it is probably an overestimate, because the correlation of the bias effect with the number of studies included in each meta-analysis shown in Table 3 is -.39. The more studies in the meta-analysis, the smaller the average biasing effect of stereotypes. This suggests the existence of bias in favor of publishing studies demonstrating bias, which declines as more studies get published.
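To see why an unweighted average can overstate an effect when smaller meta-analyses report larger effects, consider the following sketch. The numbers are invented for illustration and are not the Table 3 data; weighting by number of studies is also a simplification (formal meta-analysis typically weights by inverse variance).

```python
from statistics import mean

# Hypothetical meta-analyses: (number of studies, average bias effect r).
# Made-up values chosen so that smaller meta-analyses show larger effects.
meta_analyses = [(5, 0.25), (10, 0.15), (40, 0.05), (100, 0.02)]

study_counts = [k for k, _ in meta_analyses]
effects = [r for _, r in meta_analyses]

# Simple (unweighted) average: every meta-analysis counts equally.
unweighted_average = mean(effects)

# Average weighted by number of studies: large meta-analyses count more.
weighted_average = (
    sum(k * r for k, r in meta_analyses) / sum(study_counts)
)

def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

# A negative value means: the more studies, the smaller the reported effect.
size_effect_correlation = pearson(study_counts, effects)

print(round(unweighted_average, 4), round(weighted_average, 4))
print(round(size_effect_correlation, 2))
```

With these invented inputs the weighted average is less than half the unweighted one, which is the pattern a negative correlation between effect size and number of studies would produce.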

Why biases sometimes do versus do not occur has not been well-established by scientific research (there are lots of different explanations and theories out there that are beyond the scope of this report). Interestingly, despite the current craze over implicit biases, work in our lab shows essentially the same pattern: even for implicit perceptions (those measured by reaction time measures rather than questionnaires), people rely heavily on individuating information, and far less, or not at all, on their own stereotypes.13

Why Should Anyone Care?

There are several reasons why we think people should care about this state of affairs, ranging from scientific to political to redressing social problems.

The Priority of Truth-Seeking

Our view is that science’s first goal should be to find out things that are actually true. Prioritizing anything else is not science. This may be difficult, and there are often wide swaths of uncertainty surrounding much work in social science, especially on controversial topics. Therefore, scientists should exercise due caution with respect to canonizing conclusions. Study X may find something, but most likely, we should not be singing our findings to the world as “confirmed scientific fact” until a skeptical community of scientists independent of the original team finds the same thing. With all those as qualifiers, our primary goal should still be truth.

In this context, the conclusions currently justified, based on the overwhelming weight of the evidence, are that:

The overwhelming majority of studies of demographic stereotypes find moderate to high accuracy.

Most studies of national character stereotypes have found them to be inaccurate.

No general tendency of stereotypes to exaggerate real differences between groups has yet been discovered.

There is a general tendency, confirmed by multiple independent teams, for political stereotypes to exaggerate real differences.

Distinguishing Accurate from Inaccurate Beliefs

On the one hand, one cannot simply declare all beliefs about groups to be inaccurate. On the other, not all stereotypes are accurate. Therefore, in order to identify when inaccurate stereotypes may or may not be contributing to social problems, one needs to assess their accuracy. Sometimes, this will produce evidence of inaccurate stereotypes that could contribute to social problems; other times, it will show the stereotypes to be relatively accurate.

Political Dysfunctions in Academia

Demonstrating bias, at least when there are accuracy criteria, is relatively straightforward: One must show that beliefs systematically deviate from the criteria. It is not enough to show simply that people perceive differences between groups or individuals; those differences may be valid. This is completely obvious in many situations outside of stereotypes. And yet, when people perceive differences between groups, this is routinely attributed to “bias” even without bothering to assess for accuracy. That laypeople routinely do this is bad enough, but it’s common among academics as well. Why might this be?

As Jonathan Haidt argued in The Righteous Mind, ideology “binds and blinds.” If the only possible explanation for a group difference one has ever considered is “bias,” well, then, of course it would never occur to one to even consider accuracy. Furthermore, for socially valued characteristics, group differences mean that one group has more than the other. Even if social processes produced those differences, the mere acknowledgment of their existence may be viewed as “blaming the victim” – something taboo in politically left and academic circles. It is so taboo that, even if one is not blaming the victim, many may decide that the social costs of considering non-bias explanations for differences are not worth incurring, often because there is a heightened risk of others misrepresenting one as having blamed the victim.

Policy and Social Problems

Policies are not likely to be effective if they target the wrong problem. For example, scientists once believed that “bad humors” caused fevers, so they would bleed sick people to cure them. They also once believed that stress caused ulcers, so a whole industry was built around addressing stress (for the uninitiated, Barry Marshall received the Nobel for showing that bacteria, not stress, caused ulcers).

In the same spirit, trying to change stereotypes in order to solve inequality and injustice is not likely to be very effective if most of the stereotypes are fairly accurate. For example, we have argued that whatever is captured by measures usually referred to as “implicit bias,” using methods such as the implicit association test, often, in substantial part, reflects social realities.14 This may help explain why interventions designed to change implicit biases have virtually no effect on discriminatory behavior.

In contrast, let’s say some sort of social process caused some sort of unjust inequality. Purely hypothetically, let’s say there are two ethnic groups, A and B. In 2021, although there is little individual-level discrimination, A’s live in rural areas and B’s live in suburban areas. Social and political processes over the prior 100 years have produced better suburban than rural public schools. Thus, B’s do better in school, and are more likely to go to college and get good jobs.

Thus, there clearly is an inequality, it is socially valued and important, and we may consider it to be unjust. The solution here starts with acknowledging, and being able to publicly discuss, the A-B difference in life outcomes without fear of being denounced and blacklisted. But that is just the start. If one leaped to “discrimination” as the source in this hypothetical, one would be wrong because it does not exist.

With that in mind, we make the following recommendations:

For those who consider stereotypes to be any beliefs about groups, stop declaring them to be inaccurate.

For those who consider stereotypes to be “the subset of beliefs about groups that are inaccurate,” feel free to describe national character stereotypes, and Americans’ beliefs about wealth differences between Black and White people, as inaccurate. There are no other stereotypes that, by this definition, one can currently refer to as inaccurate.

No one should declare (as many social scientists have) that “stereotypes exaggerate real differences.” This claim is, itself, wildly exaggerated because, though stereotypes sometimes exaggerate real differences, just as often, or more so, they underestimate real differences.

Evaluate claims on their scientific merits and validity, not on their usefulness for advancing political agendas.

Why the Deep Disconnect Between the Evidence and the Canon?

We know that academic perspectives emphasizing stereotype inaccuracy are wrong. And they are not wrong in random ways. Perspectives relentlessly emphasizing stereotypes are systematically biased in the sense that they ignore evidence of accuracy and of rational and unbiased judgments regarding individuals. So, having established bias, we can now ask, “Why are the academics so biased?”

Of course, we cannot know for sure, because no study has directly linked researchers’ personal characteristics to the biased conclusions they reach in their scholarship. Nonetheless, Occam’s razor – keeping it as simple as possible – suggests that the short answer is that many academics study topics to correct what they see as societal ills; that is, they prioritize activism and their view of social justice over truth. Given the massive evidence showing a massive left skew of academia, especially in the social sciences and humanities (the areas most likely to address politicized topics), academic activism is essentially an intellectual form of leftwing activism.

This can be seen in a myriad of ways. CSPI’s new report shows a large portion of academics surveyed in the social sciences and humanities in the U.S., Canada, and the U.K. see themselves as activists, radicals, or both.15 This group is the most likely to endorse attempts to sanction colleagues who express dissenting views (e.g., by getting them fired) and to endorse discriminating against people whose views they oppose. Although it was not addressed in the report, we speculate that the idea that many stereotypes are accurate would be anathema to this group, and that no amount of evidence could convince them otherwise (we doubt many would even consider the evidence).

Political biases can produce distorted bodies of scholarship through a variety of routes. They affect who becomes an academic or scientist. The more academia is (rightly) viewed as a hotbed of left-wing activism, the less appealing it may become for scholars who hold different views. Notable percentages of faculty indicate explicit willingness to discriminate against those in their ideological outgroups, and ideological minorities report experiences of hostility, stigmatization, and a lack of belonging. It should not come as a surprise, then, that non-liberals – or, really, anyone whose work seems to contest narratives that are sacred to left and far-left faculty – are overlooked in hiring decisions, or self-select out of pursuing academic careers.

Political bias also manifests in the questions researchers ask, how they measure what they’re studying, and how they interpret findings. Researchers generally have large latitude in the topics they choose to study and investigate. Surely, however, radicals and activists will tend to ask different questions than centrists, libertarians, conservatives, and the apolitical.

Measurement and interpretation may also fall victim to political biases for similar reasons, as concepts and findings are filtered through one’s personal worldview. Ideas that are contested in the wider society (value of affirmative action, the importance of microaggressions), but widely shared by those on the left may become normative and treated as “well-established scientific facts” among those in the bubble that is the academic left, even in the absence of solid or rigorous evidence. As such, asking skeptical questions about or drawing conclusions that conflict with these social norms may be inconvenient because such actions draw the ire of one’s colleagues and may result in condemnation or denunciation. Academia operates as a social-reputational system whereby an individual’s success hinges largely on the favorable evaluations of colleagues. As such, there are strong incentives for doing work that will lead to social approval from others (and, especially, avoiding work that will garner disapproval from peers). If colleagues might reject or vehemently disagree with one’s findings, there may be a strong incentive for self-suppression of those findings out of fear of punishment. If one elects not to self-suppress, one risks being denounced, ostracized and possibly even punished (through forced retraction, deplatforming, or even firing). Although more severe punishments, such as firing, are rare, we suspect that even the public shaming that comes with a forced retraction or deplatforming will often be sufficient to persuade a great many scholars to never again go public with a similar idea or finding.

Furthermore, this problem of self-censorship of findings that make sense in the wider world but are anathema to leftwing academics, especially the large minority of activists, creates further problems downstream from research. That is, if researchers conduct a study that could produce a finding unpopular in academia but which would be welcome elsewhere (e.g., that preferential selection forms of affirmative action are ineffective), the researchers are now in an ethical bind. They have two choices, both bad: 1. Try to publish the study and risk denunciation, protracted battles to get the paper published and not retracted, and possibly even firing; or 2. Suppress it, which would be scientifically unethical, even though it would be politically safe. There is a simple solution: Do not study such topics. This entirely eliminates the ethical quandary from the researchers’ standpoint but is obviously no way to conduct a society that prefers to evaluate its practices, policies, and interventions based on scientific evidence rather than political popularity.

Finally, research may be subject to political bias in the form of selective citations. The easiest way for researchers to ignore inconvenient or disliked findings is to not cite or discuss them. Bias can occur when work is cited more frequently based on its political content rather than scientific quality. Work that is highly cited will often enter the canon – the rarefied list of findings deemed to be “true with great certainty” and which get highlighted in major reviews and textbooks. Of course, work that is ignored never makes it into the canon. This is science working well when work is ignored because it has been refuted. But when work is ignored without having been refuted, this is science working poorly. This is precisely the case with stereotype accuracy. Scores of studies demonstrate at least moderate accuracy, but it is, to this day, far more common to see major reviews and textbooks repeat the naïve assumption that stereotypes are inaccurate, as if those scores of studies were never conducted.

Paradigmatic of this is a recent review in an outlet of record for psychology that concluded gender stereotypes are mostly inaccurate.16 Reaching this position required ignoring and failing to cite or consider 11 published papers reporting 16 separate studies that found gender stereotypes to range from moderately to highly accurate. Future research may end up refuting these 11 papers on gender stereotypes, and criticism of these papers and their findings should always be on the table—no scientific work should be beyond scrutiny. But, at present, the work has not been refuted. The relevant research was completely ignored, and a review claiming comprehensiveness and nuance should not be in the business of ignoring work that fails to conform to desired narratives and positions.

Testaments to the inaccuracy of stereotypes still dominate textbooks and reviews of stereotyping. To borrow an expression, it is a dead horse that is still up and trotting around. Many think of ‘science denial’ as something primarily characterizing right-wing disbelief in climate science and evolution. Apparently, however, it is alive and well in social scientists’ own resistance to the overwhelming evidence of accuracy and rationality in many people’s stereotypes.

We are going to end with a true story. A couple of years ago, one of us (Jussim) was having breakfast with some other very famous social psychologists after a conference. I can only report my lived experience. I had given a talk on the value of intellectual diversity in academia, and had briefly mentioned the work on stereotype accuracy. They did not like it, and, like many social psychologists before them, were criticizing it without refuting any of it. As it became clear that, unless they could actually refute my work, I was not going to change my views about it, one of them said, and this is a close paraphrase, “But the Nazis relied on stereotypes.” Even though one of the most sure-fire ways to derail a nuanced and evidence-based conversation is to throw in “But Nazis!,” it’s not a completely ridiculous point; the Nazis did advance vicious propaganda that so dehumanized Jews that it paved the way for genocide. Indeed, as I and my collaborators reported in a 2020 study, crude images of Jews and slick antisemitic conspiracy theories can still be found in some of the more extreme networks that fester in nastier corners of social media.17

Recognizing how propaganda can promote and exploit pernicious stereotypes is important, and we are glad people are outraged by it. But what goes on in bleak corners of 4chan or Parler is, however disturbing, not representative of what the social science has discovered about what most people who have been studied think about most groups they have been asked about.

If people estimate the average daily high temperature in March in New Jersey, Nazis are not involved in figuring out whether they are right or wrong. Similarly, if people estimate the proportion of Black adults with college degrees, the standardized test scores of Jews, or the yearly income of male and female doctors, one can figure out how accurate they are by comparing their estimates to criteria.

Which gets us back to the evidence. If the overwhelming evidence does not change someone’s belief in the so-called ‘inaccuracy’ of stereotypes, what could? A foundational premise of the sciences is that they self-correct in the face of new evidence. Nothing in this essay should dissuade anyone from continuing efforts to combat discrimination, disinformation, and propaganda. We hope, however, that, with respect to the longstanding claim that ‘stereotypes are inaccurate,’ those who are willing to consider the actual evidence might be persuaded that a little scientific self-correction is long overdue.

References

Society for Personality and Social Psychology. Feb. 13, 2021. “Time for Change, Part II Creating a More Diverse, Methodologically Rigorous.” YouTube Video. Available at https://www.youtube.com/watch?v=64EQuhsAVb0.

Srivastava, Sanjay. Feb. 13, 2021. “Bringing the session home is @Ivuoma talking about moving beyond implicit bias in addressing lack of diversity in social psychology…” Twitter Post. Available at https://twitter.com/hardsci/status/1360663024135098368.

Snyder, Mark. 1977. “On the Self-Fulfilling Nature of Social Stereotypes.” Paper presented at the 1977 meeting of the American Psychological Association. Available at https://files.eric.ed.gov/fulltext/ED147435.pdf.

Lindsay, James, Peter Boghossian, and Helen Pluckrose. 2018. “From Dog Rape to White Men in Chains: We Fooled the Biased Academic Left with Fake Studies.” USA Today. Available at https://www.usatoday.com/story/opinion/voices/2018/10/10/grievance-studies-academia-fake-feminist-hypatia-mein-kampf-racism-column/1575219002/.

“Woozle Effect.” 2021. Wikipedia. Last modified 27 May, 2021. Available at https://en.wikipedia.org/wiki/Woozle_effect.

Jussim, Lee, Sean T. Stevens, and Nathan Honeycutt. 2019. “Unasked Questions About Stereotype Accuracy.” Archives of Scientific Psychology 6: 214-229.

Jussim, Lee, Jarret T. Crawford, Stephanie M. Anglin, John R. Chambers, Sean T. Stevens, and Florette Cohen. 2016. “Stereotype Accuracy: One of the Largest and Most Replicable Effects in all of Social Psychology.” In T. D. Nelson (Ed.), Handbook of prejudice, stereotyping, and discrimination. Psychology Press: pp. 31-63.

Richard, F. D., Charles F. Bond Jr. and Juli J. Stokes-Zoota. 2003. “One Hundred Years of Social Psychology Quantitatively Described.” Review of General Psychology 7(4): 331-363.

McCauley, Clark, and Christopher L. Stitt. 1978. “An Individual and Quantitative Measure of Stereotypes.” Journal of Personality and Social Psychology 36(9): 929-940.

Swim, Janet. 1994. “Perceived Versus Meta-Analytic Effect Sizes: An Assessment of the Accuracy of Gender Stereotypes.” Journal of Personality and Social Psychology 66(1): 23-36.

Westfall, Jacob, Leaf Van Boven, John R. Chambers, and Charles M. Judd. 2015. “Perceiving Political Polarization in the United States: Party Identity Strength and Attitude Extremity Exacerbate the Perceived Partisan Divide.” Perspectives on Psychological Science 10(2): 145-158.

Kunda, Ziva and Paul Thagard. 1996. “Forming Impressions from Stereotypes, Traits, and Behaviors: A Parallel-Constraint-Satisfaction Theory.” Psychological Review 103: 284-308.

Rubinstein, Rachel S., Lee Jussim, and Sean T. Stevens. 2018. “Reliance on Individuating Information and Stereotypes in Implicit and Explicit Person Perception.” Journal of Experimental Social Psychology 75: 54-70.

Jussim, Lee, Akeela Careem, Zach Goldberg, Nathan Honeycutt, Sean T. Stevens. 2021. “IAT Scores, Racial Gaps and Scientific Gaps.” In press. Chapter to appear in: Jon A. Krosnick, Tobias H. Stark, and A. L. Scott, (Eds.). The Future of Research on Implicit Bias. Cambridge University Press. Available at https://mfr.osf.io/render?url=https://osf.io/mpdx5/?direct%26mode=render%26action=download%26mode=render.

Kaufmann, Eric. 2021. “Academic Freedom in Crisis: Punishment, Political Discrimination, and Censorship.” Center for the Study of Partisanship and Ideology. Available at https://cspicenter.org/wp-content/uploads/2021/03/AcademicFreedom.pdf.

Ellemers, Naomi. 2018. “Gender Stereotypes.” Annual Review of Psychology 69: 275-298.

Finkelstein, Joel, Pamela Paresky, Alex Goldenberg, Savvas Zannettou, Lee Jussim, Jason Baumgartner, Congressman Denver Riggleman, John Farmer, Paul Goldenberg, Jack Donohue, Malav H. Modi. 2020. “Antisemitic Disinformation: A Study of the Online Dissemination of Anti-Jewish Conspiracy Theories.” Network Contagion Research Institute. Available at https://networkcontagion.us/reports/antisemitic-disinformation-a-study-of-the-online-dissemination-of-anti-jewish-conspiracy-theories/.

Very interesting article, but I wish you would have taken a step back to interrogate the etymology of the term and development of the concept. While the word dates (in French) to the 18th century and refers to a method of printing, the use of it as a metaphor in English dates to at least 1922 via Walter Lippmann.

But I believe it is fair to ask - is the concept of "stereotype" as we now generally understand it even useful? Does it illuminate more than it obscures? I'm not sure that it does. It is a recent development, not an ancient intuitive understanding, after all. It is essentially just another word for "generalization," except, as your article ably outlines, it functions to cast disrepute on generalizations and suggest they are inherently bigoted as applied to humans. But we know what (true) generalizations about large sets can and can't tell us about individuals. The effort to foreclose the matter entirely is flatly anti-science.