Jobst Landgrebe is a German scientist and entrepreneur. He began his career as a Fellow at the Max Planck Institute of Psychiatry, then became a Senior Research Fellow at the University of Göttingen, working in cell biology and biomathematics. In April 2013, he founded Cognotekt, an AI-based language technology company.

Barry Smith is Professor of Philosophy at the University at Buffalo, with joint appointments in the Departments of Biomedical Informatics, Neurology, and Computer Science and Engineering. He is also Director of the National Center for Ontological Research and Visiting Professor at the Università della Svizzera italiana (USI) in Lugano, Switzerland.

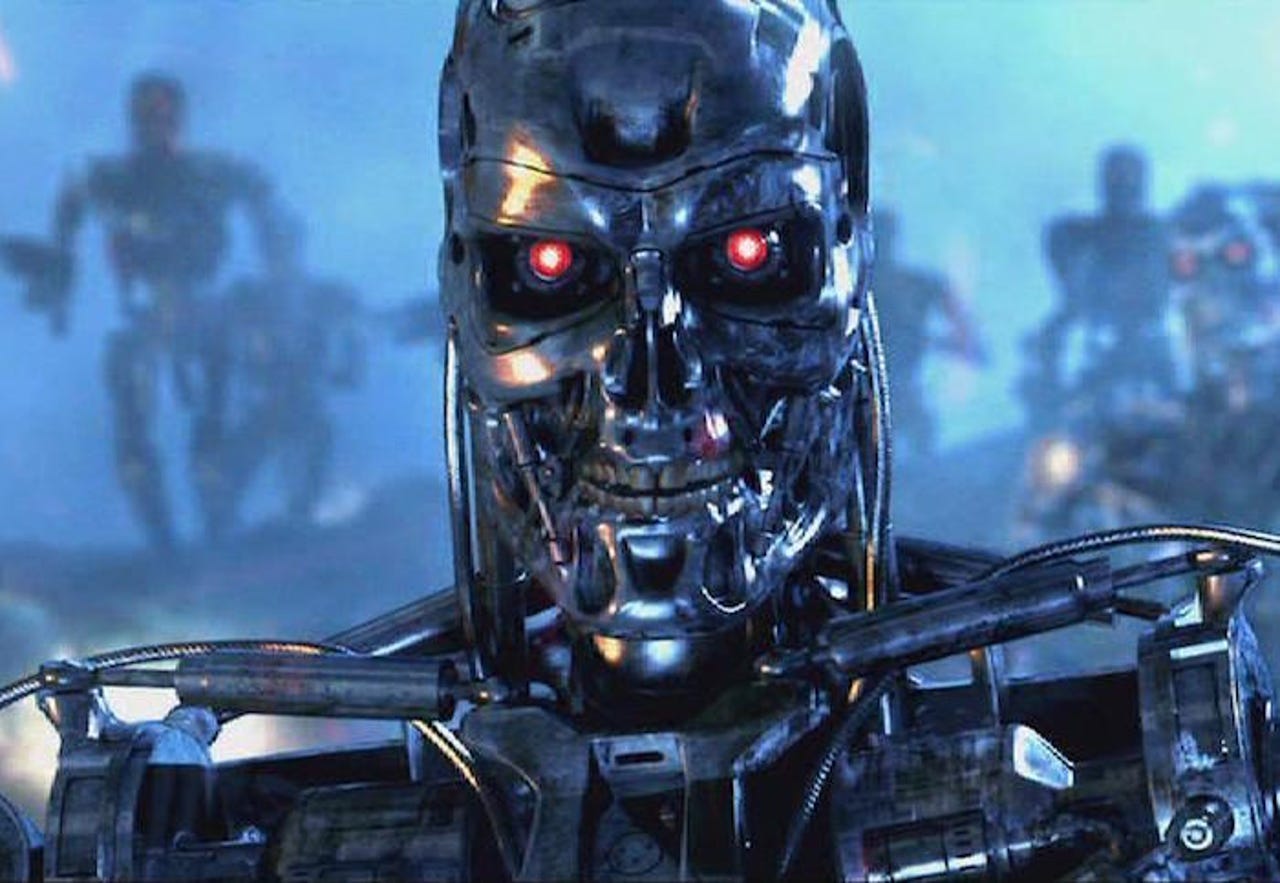

Landgrebe and Smith join the podcast to talk about their book Why Machines Will Never Rule the World: Artificial Intelligence without Fear. As the title indicates, the authors are skeptical of claims made by Nick Bostrom, Elon Musk, and others about a coming superintelligence that will be able to dominate humanity. Landgrebe and Smith think not only that such an outcome is beyond our current level of technology, but that it is, for all practical purposes, impossible. Among the topics discussed are:

- The limits of mathematical modeling
- The relevance of chaos theory
- Our tendency to overestimate human intelligence and underestimate the power of evolution
- Why the authors don’t believe that the achievements of DeepMind, DALL-E, and ChatGPT indicate that general intelligence is imminent
- Where Landgrebe and Smith think believers in the Singularity go wrong

Listen in podcast form or watch on YouTube.

Links:

Rodney Brooks, “Intelligence without Representation.”

Nick Bostrom, Superintelligence.